Thoughts on MuSA_RT 2.0

The objective for MuSA_RT 2.0 as a holiday project was to use cutting-edge software development tools and frameworks/packages to put MuSA.RT in the hands of anyone with a phone, tablet or computer (limited to the Apple ecosystem because of resource and time constraints). The version currently in the Apple App Store, although quite crude in many respects, achieves this goal and will serve, time permitting, as a starting point for exciting explorations.

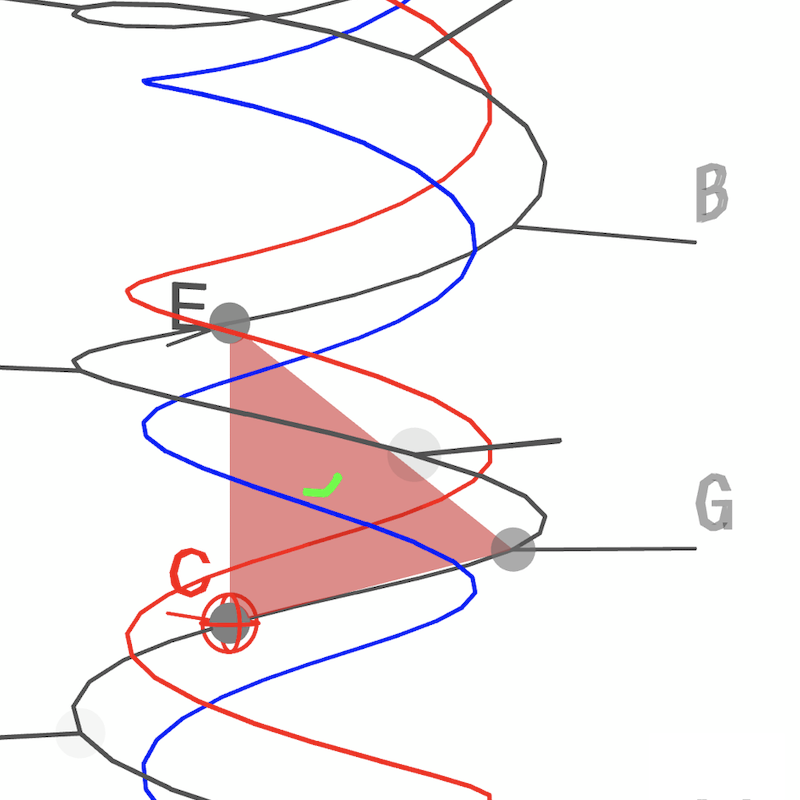

3D graphics and Augmented Reality

Rendering 3D geometry that approximates the original MuSA_RT graphics was almost too easy, and as a result hasn’t yet received the attention it deserves. There is much to explore and improve in terms of geometry and appearance (materials and lighting), and then efficiency.

Similarly, the Augmented Reality (AR) mode is but a bare proof of concept, which only sets the stage for exciting explorations. The first question is of course: what, if anything, can an AR experience of MuSA_RT bring to the performer and to listeners? For example, MuSA_RT has been used as an educational tool to visually support explanations of tonality, both in private and in concert settings. For concerts, the graphics were typically projected on a large screen. What if the model were on stage instead?

Audio processing for music analysis

Efficient Fast Fourier Transform (FFT) computation is available on virtually any device with a microphone, and microphones are now nearly ubiquitous in computing devices, from phones to tablets to laptops. Together, these made possible an implementation of MuSA_RT that does not require exotic or cumbersome equipment (such as MIDI devices) and can truly run from anyone’s pocket (provided they have a reasonably recent Apple device). This was an unexpected but in retrospect predictable bonus, which was not part of the initial goals for MuSA_RT 2.0.

Processing an unrestricted audio signal from a phone’s microphone to estimate tonal context allows some interesting experiments. In natural contexts, complete silence does not exist, and ambient sounds (light bulbs or refrigerators buzzing, rain falling, people talking, birds singing, dogs barking) all contribute to a tonal context, whether musical or not.
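To make the idea of going from a raw signal to a tonal context a little more concrete, here is a minimal sketch in Python. It is not the app's actual pipeline: the naive DFT stands in for a real FFT, the mapping to pitch classes assumes equal temperament with A4 at 440 Hz, and the sample rate, the test tone and all function names are made up for illustration.

```python
import cmath
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def dft_magnitudes(samples):
    """Naive O(n^2) DFT (a stand-in for an FFT); returns magnitudes of bins 0..n/2-1."""
    n = len(samples)
    return [
        abs(sum(s * cmath.exp(-2j * math.pi * k * t / n) for t, s in enumerate(samples)))
        for k in range(n // 2)
    ]


def pitch_class(freq_hz, a4_hz=440.0):
    """Map a frequency to the nearest equal-tempered pitch class (0 = C ... 11 = B)."""
    midi_note = round(69 + 12 * math.log2(freq_hz / a4_hz))
    return midi_note % 12


def pitch_class_energy(samples, sample_rate):
    """Fold the magnitude spectrum into 12 pitch-class buckets."""
    n = len(samples)
    energy = [0.0] * 12
    for k, magnitude in enumerate(dft_magnitudes(samples)):
        if k == 0:
            continue  # skip the DC bin, which has no pitch
        energy[pitch_class(k * sample_rate / n)] += magnitude
    return energy


# Hypothetical input: 0.1 s of a pure 440 Hz tone sampled at 2560 Hz.
sample_rate = 2560
tone = [math.sin(2 * math.pi * 440 * t / sample_rate) for t in range(256)]
energy = pitch_class_energy(tone, sample_rate)
print(NOTE_NAMES[energy.index(max(energy))])
```

Every sound reaching the microphone, musical or not, ends up in one of these twelve buckets, which is why a buzzing refrigerator contributes to the tonal context just as a piano does.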

In a more focused musical context, the app passes the “Let It Be” test: start the app, put the device on the piano, play the chords, and MuSA_RT gets it right (in the key of C major: C G Am F C G F C). MuSA_RT should work with most musical instruments and even with voice. It is worth noting here that the Spiral Array model only accounts for major and minor triads (it does not account for other chords such as 7ths, etc.), so even though the model tracks the tonal context generated by such chords, it does not recognise/name them.
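For readers curious about how a geometric model can name a triad, here is a rough Python sketch in the spirit of the Spiral Array. Pitches sit on a helix, one quarter turn per perfect fifth; each triad is a weighted blend of its three pitches; and a set of sounding pitches is matched to the nearest triad. The helix rise `H`, the chord `WEIGHTS`, the equal weighting of input pitches and all function names are illustrative assumptions, not the values or code used in MuSA_RT.

```python
import math

H = math.sqrt(2 / 15)      # rise per fifth for a unit-radius helix (assumed value)
WEIGHTS = (0.5, 0.3, 0.2)  # root, fifth, third (illustrative weights)


def pitch_name(k):
    """Name of the pitch k fifths above C on the line of fifths (k = 0 is C)."""
    letter = "FCGDAEB"[(k + 1) % 7]
    accidentals = (k + 1) // 7
    return letter + ("#" * accidentals if accidentals > 0 else "b" * -accidentals)


def position(k):
    """Helix position of pitch k: a quarter turn and a rise of H per fifth."""
    return (math.sin(k * math.pi / 2), math.cos(k * math.pi / 2), k * H)


def blend(points, weights):
    """Weighted sum of 3D points."""
    return tuple(sum(w * p[axis] for p, w in zip(points, weights)) for axis in range(3))


def major_triad(k):
    # root, fifth (one step up the line of fifths), major third (four steps up)
    return blend([position(k), position(k + 1), position(k + 4)], WEIGHTS)


def minor_triad(k):
    # root, fifth (one step up), minor third (three steps down)
    return blend([position(k), position(k + 1), position(k - 3)], WEIGHTS)


INDEX = {pitch_name(k): k for k in range(-15, 20)}


def nearest_triad(names):
    """Name the major or minor triad closest to the centroid of the given pitches."""
    points = [position(INDEX[name]) for name in names]
    centre = blend(points, [1 / len(points)] * len(points))
    candidates = []
    for k in range(-11, 16):
        candidates.append((math.dist(centre, major_triad(k)), pitch_name(k) + " major"))
        candidates.append((math.dist(centre, minor_triad(k)), pitch_name(k) + " minor"))
    return min(candidates)[1]


# The four chords of the "Let It Be" progression in C major.
for chord in (["C", "E", "G"], ["G", "B", "D"], ["A", "C", "E"], ["F", "A", "C"]):
    print(chord, nearest_triad(chord))
```

Because the only candidates are major and minor triads, a 7th chord fed to `nearest_triad` still lands somewhere in the space, but it can only ever be labelled with the nearest triad, which mirrors the limitation described above.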

Playing recorded music from a speaker usually yields disappointing results in terms of chord tracking, for a number of reasons. Of course the quality of the speakers and the microphone will have an impact. The spectrum for music in which drums are a feature tends to be heavily dominated by those drums (they are the loudest). The audio engineers’ magic in modern professional music recording often results in a sound whose spectrum is quite different from that obtained directly from an acoustic musical instrument.

All this to lower expectations, but also to point to the fact that the FFT is probably not the best tool for this type of audio analysis (humans do hear chords over drums, even in modern recordings…). The FFT is undeniably a wonderful tool for audio signal processing, elegant and efficient, and because of that it is widely available and widely used… even when it is not the right tool. Unfortunately, for the moment the FFT remains the easiest (and only?) way to approximate human-like low-level audio signal analysis in an interactive system.